#modeling #testing #generation #integration

(An excerpt in German is available as part of an article on Lean Quality Management in German Testing Magazin.)

Abstract

Concept, code, and tests tend to drift apart in larger or longer-running projects. A lot of time and money is spent keeping them aligned, and the result is often still poor. With agile working models and more frequent deployment cycles, this gets even worse.

Two main reasons are identified:

- hands-on working style with insufficiently connected tools

- missing refactoring capability for concepts and tests (compared to code where we have learned to love powerful IDEs)

To keep concept and code aligned, I presented model-driven system documentation in 2018; see Ni2018 in Publications.

Today I will present model-driven test generation, which extends that successful alignment even further.

After motivating model-driven test generation, I will walk through a short journey that many of us take, probably on a daily basis. It starts with an epic, created manually by a Product Owner in the well-known tracking tool Jira. Via an interface, this epic is pushed to a system model in MagicDraw. There, a business analyst describes the impact of the epic on existing use cases. Then VelociRator, the model-driven test generator, produces tests from the modeled behavior. Finally, these tests are pushed back to Jira and linked to the initial epic, closing the loop with neat test coverage on the epic.

An outlook on model-driven test generation for automation will hint at how to combine test generation and test automation. Finally, I will conclude with why model-driven test generation saved our backs during COVID-19.

How does it work?

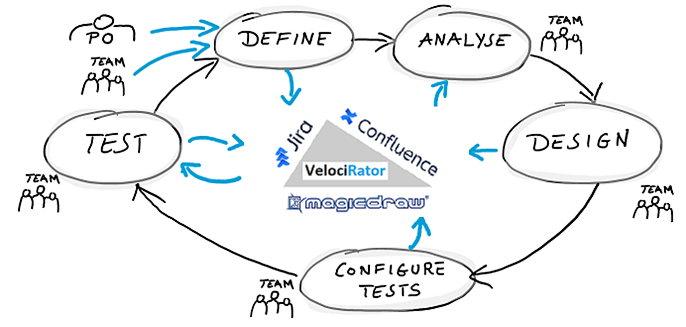

The figure above shows the journey in phases, arranged around the involved tools in the middle.

Now, let’s repeat the quick journey from above in more detail.

Define

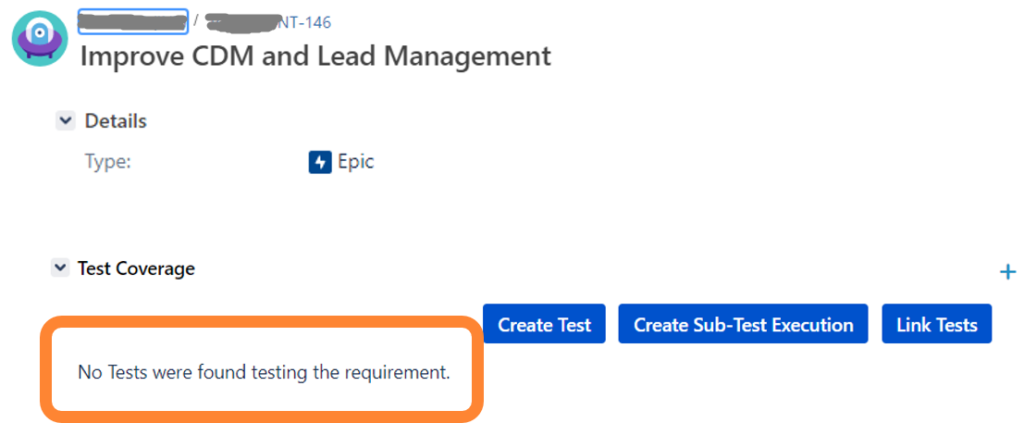

As PO, I create a new issue, such as an epic, in Jira. Let’s name it “Improve CDM and Lead Management”.

Initially, the test coverage is empty. After assigning the issue to the team or some analyst of a team, the epic is pulled into a model.

Analyse

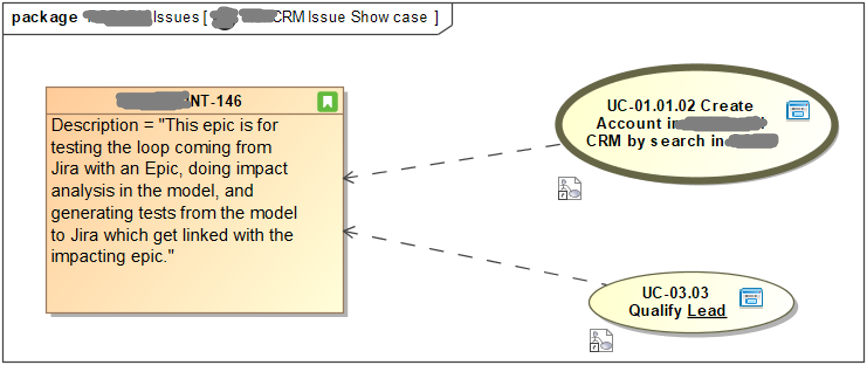

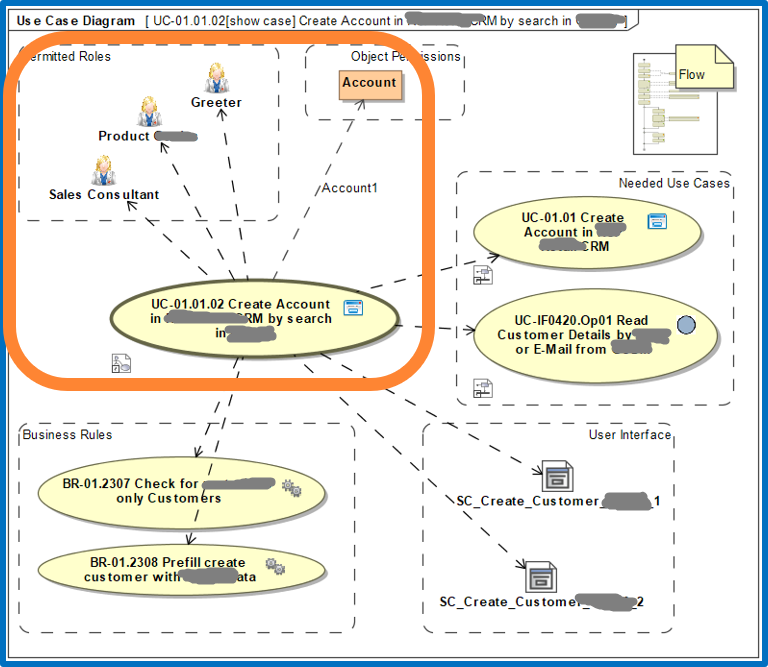

The model is our digital twin of the system under design. It contains system use cases describing the logical interaction of users with the system for a set of scenarios.

The analyst identifies which of our use cases are impacted by the new requirement by drawing dependencies from the use cases to the issue.

Optionally, change elements can be used to document what has to be changed and why.
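The dependencies drawn in the model amount to a simple trace relation between use cases and issues. As a minimal sketch, assuming this relation can be exported as (use case, issue key) pairs, the impacted use cases for an epic could be looked up as follows. The use case names, the issue keys `CRM-1` and `CRM-2`, and the function `impacted_use_cases` are hypothetical; they are not part of MagicDraw, Jira, or VelociRator.

```python
# Hypothetical export of the dependencies drawn in the model.
# Each pair reads: "this use case is impacted by this issue".
dependencies = [
    ("Create Lead", "CRM-1"),
    ("Qualify Lead", "CRM-1"),
    ("Merge Customer Data", "CRM-2"),
]


def impacted_use_cases(dependencies, issue_key):
    """Return the sorted names of all use cases that depend on the given issue."""
    return sorted({use_case for use_case, issue in dependencies if issue == issue_key})


print(impacted_use_cases(dependencies, "CRM-1"))
```

Keeping the relation as plain pairs makes the reverse question (which issues touch a given use case) equally easy to answer.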

Design

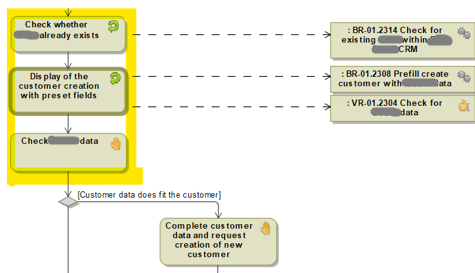

The next step is to dive into the impacted use cases and change them accordingly.

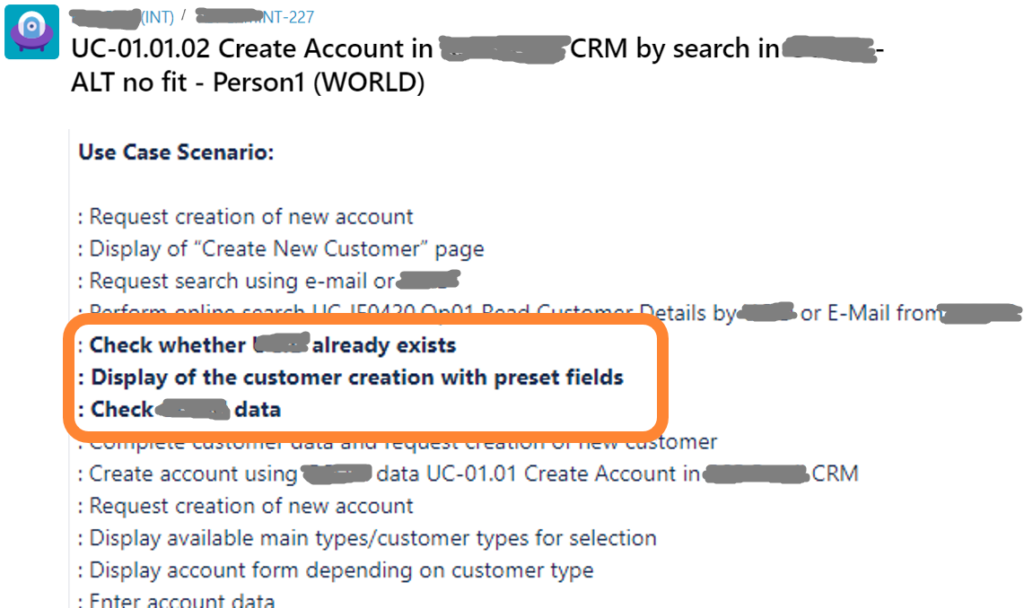

The designer checks the steps in the workflow together with their associated rules, documentation, and expected results. Since testers, designers, and developers all base their work on these workflows, all relevant information is documented here. In the upper left, we have highlighted a few steps so that we can easily find them in the generated tests later on.

In addition, though not technically necessary, it is also possible to document the external view as a kind of micro-architecture per use case.

Configure Tests

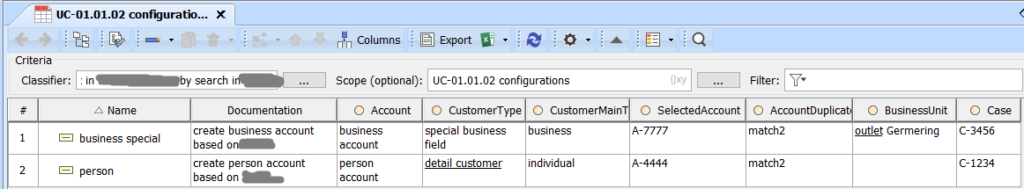

Next, the tester configures the tests inside the model by providing values to test parameters of selected test paths.

Each row is a test configuration with a name and documentation. All other columns represent test parameters such as Account, CustomerType, and so on. When the test generator is switched on, it picks each selected test path and generates a test for each row.
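The pairing of selected test paths with configuration rows can be sketched in a few lines. This is only an illustration under stated assumptions, not VelociRator's implementation: the classes `ScenarioPath` and `ConfigRow`, the function `generate_tests`, and all sample values are hypothetical, and the sketch assumes that every configuration row applies to every selected path.

```python
from dataclasses import dataclass, field


@dataclass
class ScenarioPath:
    """A test path through a use case (hypothetical structure)."""
    use_case: str
    alternative: str
    selected: bool = True


@dataclass
class ConfigRow:
    """One row of the configuration table: name, documentation, parameter values."""
    name: str
    documentation: str
    parameters: dict = field(default_factory=dict)


def generate_tests(paths, rows):
    """Pair every selected path with every configuration row."""
    return [(path, row) for path in paths if path.selected for row in rows]


# Sample data, invented for illustration only.
paths = [
    ScenarioPath("Qualify Lead", "ALT no fit"),
    ScenarioPath("Qualify Lead", "MAIN", selected=False),
]
rows = [
    ConfigRow("person1", "Private customer", {"Account": "A-100", "CustomerType": "Person"}),
    ConfigRow("company1", "Business customer", {"Account": "A-200", "CustomerType": "Company"}),
]

tests = generate_tests(paths, rows)
for path, row in tests:
    print(path.use_case, path.alternative, row.name, row.parameters)
```

With one selected path and two rows, the sketch yields two tests; the unselected path contributes none.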

Test

Back in Jira, the tester selects a generated test like the following.

The name of the test is constructed from the name of the use case, the chosen alternative (ALT no fit), and the selected test configuration (person1). As part of its description you get a compact use case scenario. You also get full test details including test data and expected results per step.
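The naming scheme can be illustrated with a small helper. The source only states which three parts make up the name; the `" - "` separator, the function `build_test_name`, and the use case name "Qualify Lead" are assumptions made for this sketch.

```python
def build_test_name(use_case, alternative, configuration):
    """Join the three name parts; the ' - ' separator is an assumption."""
    parts = [use_case, alternative, configuration]
    # Skip empty parts so a missing alternative does not leave a dangling separator.
    return " - ".join(part.strip() for part in parts if part.strip())


name = build_test_name("Qualify Lead", "ALT no fit", "person1")
print(name)
```

Deriving the name from the model elements keeps generated tests traceable: the use case, the path, and the configuration can all be read off the test name.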

Closing the Loop

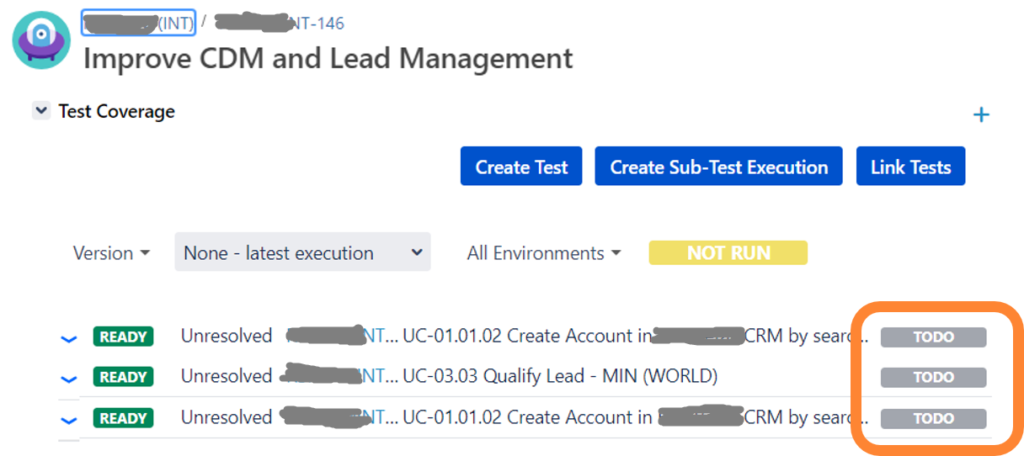

Since the generated tests can be linked automatically to the originating epic, you also get predefined test coverage.

If the tester now chooses to execute one or all of the tests, their results will be immediately reflected in the test coverage. Therefore, the PO can easily follow progress, too.
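How execution results roll up into a coverage view on the epic can be sketched as a simple count per status. The status values `PASS`, `FAIL`, and `TODO` and the function `summarize_coverage` are hypothetical; the actual coverage display in Jira may be computed differently.

```python
from collections import Counter


def summarize_coverage(statuses):
    """Summarize the execution statuses of all tests linked to one epic."""
    counts = Counter(statuses)
    total = len(statuses)
    # Avoid division by zero for an epic without linked tests.
    passed_ratio = counts["PASS"] / total if total else 0.0
    return {"total": total, "by_status": dict(counts), "passed_ratio": passed_ratio}


# Hypothetical statuses of four generated tests linked to the epic.
linked_test_statuses = ["PASS", "FAIL", "TODO", "PASS"]
summary = summarize_coverage(linked_test_statuses)
print(summary)
```

An epic without linked tests yields a total of zero, which matches the initially empty coverage described in the Define phase.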

Presentation

See presentation below
