#architecture #clarity #velocity #direction

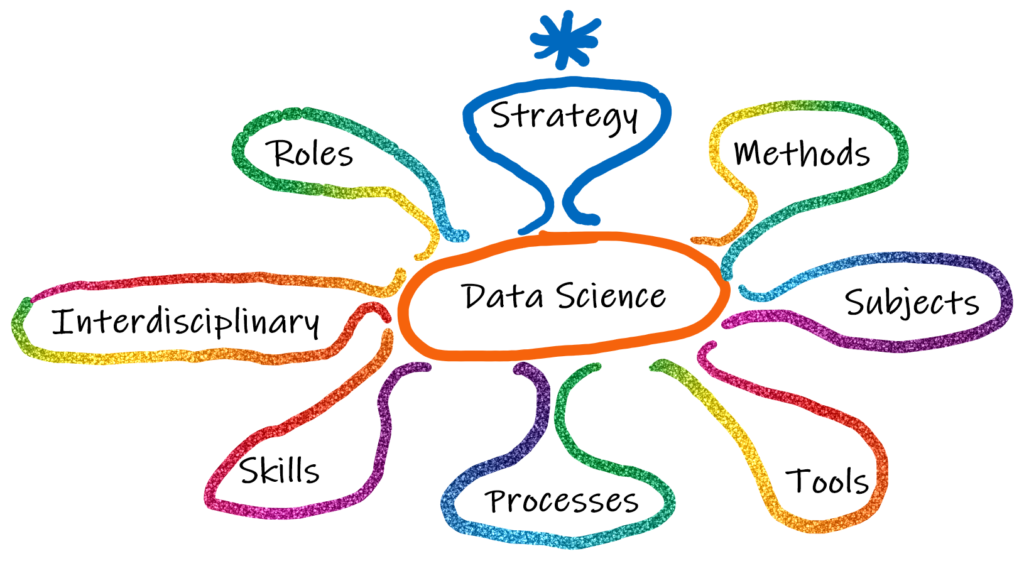

Application Lifecycle Management (ALM) in LeanIX is a central part of Enterprise Architecture Management (EAM). It lets companies manage and optimize the entire lifecycle of their applications, from planning and development through operation to retirement.

As an EAM tool, LeanIX offers extensive functionality to support Application Owners in managing their applications. It provides a holistic view of the IT landscape and helps identify dependencies, risks, and optimization potential.

This blog post first explains why ALM matters for Application Owners and then presents concrete suggestions for improving its implementation in LeanIX. The goal is to make Application Lifecycle Management more efficient and effective and thereby create more value for the company.

Raising awareness among Application Owners

Some Application Owners are responsible for several applications and still believe they do not need LeanIX. To convince them of the importance of EAM in general and of the tool in particular, you can ask the following test questions, which focus on architecture, processes, and data:

a) Architecture-related questions:

- How quickly can you find out which of your applications would be affected by a planned infrastructure change?

- Which of your applications use outdated technologies and need to be modernized in the near future?

b) Process-related questions:

- How would you describe the impact of one of your applications on the company's entire value chain?

- If one of your applications fails: how quickly can you identify all affected business processes?

c) Data-related questions:

- Can you sketch, for each of your applications, the data entities it processes and their data flows?

- Can you state ad hoc which of your applications process personal data and how that data is protected?

d) Cross-cutting questions:

- How quickly can you compile all relevant information about your applications in response to an audit request?

- How do you ensure that all stakeholders are always informed about the current status of your applications and about planned changes?
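Several of these questions turn into simple lookups once the relations between applications, infrastructure, processes, and data are recorded in one place. The following Python sketch illustrates this with a small in-memory inventory. It does not use the LeanIX API: the `Application` class, its fields, and the sample data are hypothetical stand-ins chosen purely for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Application:
    """Hypothetical, simplified stand-in for an application record."""
    name: str
    it_components: set[str] = field(default_factory=set)
    business_processes: set[str] = field(default_factory=set)
    processes_personal_data: bool = False


def affected_by_component(apps: list[Application], component: str) -> list[str]:
    """Applications that depend on the given infrastructure component."""
    return sorted(a.name for a in apps if component in a.it_components)


def processes_hit_by_outage(apps: list[Application], app_name: str) -> list[str]:
    """Business processes supported by the named application."""
    for a in apps:
        if a.name == app_name:
            return sorted(a.business_processes)
    return []  # unknown application: nothing recorded


def apps_with_personal_data(apps: list[Application]) -> list[str]:
    """Applications flagged as processing personal data."""
    return sorted(a.name for a in apps if a.processes_personal_data)


# Illustrative sample data only.
INVENTORY = [
    Application("CRM", {"Oracle DB 12c", "Linux VM"},
                {"Lead Management", "Order Intake"}, True),
    Application("Billing", {"Oracle DB 12c"}, {"Invoicing"}, True),
    Application("Intranet", {"Linux VM"}, {"Internal Communication"}, False),
]

if __name__ == "__main__":
    print(affected_by_component(INVENTORY, "Oracle DB 12c"))  # ['Billing', 'CRM']
    print(processes_hit_by_outage(INVENTORY, "CRM"))
    print(apps_with_personal_data(INVENTORY))
```

An Application Owner who cannot answer the questions above within minutes is effectively missing this kind of maintained, queryable inventory.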

Suggestions for improving Application Lifecycle Management in LeanIX

To support Application Owners in maintaining their applications in LeanIX, to align the enterprise architecture more closely with the business, and to make use of the link to data management, I propose the following concrete activities as a basis for discussion:

- Training and workshops for Application Owners:

  - Organize regular training sessions on LeanIX and best practices

  - Run workshops that make the relationship between applications, business processes, and data clear

  - Create practical guides and checklists for maintaining applications in LeanIX, and publish them in an easily accessible tool such as Confluence

  - Create LeanIX surveys that let Application Owners provide the relevant information simply by answering tailored questionnaires

- Process-oriented modeling in LeanIX:

  - Implement a process-oriented view in LeanIX

  - Link applications to the business processes they support

  - Visualize the contribution of each application to the value chain

- Integration of data management aspects:

  - Extend the LeanIX metamodel with relevant data management attributes

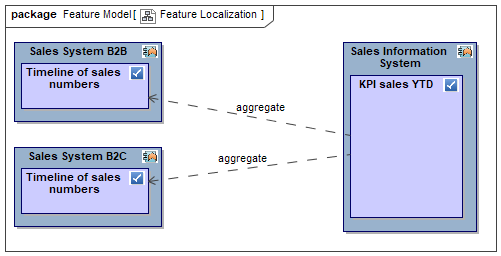

  - Link applications to the data entities they process

  - Implement data flow diagrams that show the relationship between applications and data

- Automation and integration:

  - Implement interfaces between LeanIX and other relevant tools (e.g. BPM, data management platform)

  - Automate the updating of basic information in LeanIX

  - Create dashboards that visualize maintenance status and data quality

- Governance and incentives:

  - Establish clear responsibilities and SLAs for maintaining application information

  - Implement a reward system for Application Owners who keep their data up to date

  - Conduct regular reviews of the application landscape

- Data governance integration:

  - Link data governance roles (e.g. Data Owner, Data Steward) to the corresponding applications in LeanIX

  - Implement attributes for data classification and data protection requirements on applications

  - Create reports that show data governance aspects across the entire application landscape

- Continuous improvement:

  - Establish a regular feedback process with Application Owners

  - Analyze usage patterns in LeanIX to identify potential for improvement

  - Continuously adapt the metamodel and the processes based on this feedback
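The dashboard idea for maintenance status and data quality can be made concrete with a simple completeness score. The following is a minimal sketch, assuming application records have been exported as plain dictionaries. The field names in `REQUIRED_FIELDS`, the record layout, and the sample data are assumptions for illustration and do not reflect the actual LeanIX metamodel or any LeanIX export format.

```python
from collections import defaultdict

# Assumed set of fields an Application Owner is expected to maintain.
REQUIRED_FIELDS = ("description", "lifecycle", "business_processes", "data_objects")


def completeness(record: dict) -> float:
    """Share of required fields that are filled (non-empty) in one record."""
    filled = sum(1 for name in REQUIRED_FIELDS if record.get(name))
    return filled / len(REQUIRED_FIELDS)


def average_completeness_by_owner(records: list[dict]) -> dict[str, float]:
    """Average completeness per owner; records without owner are grouped together."""
    scores = defaultdict(list)
    for record in records:
        owner = record.get("owner") or "(unassigned)"
        scores[owner].append(completeness(record))
    return {owner: sum(values) / len(values) for owner, values in scores.items()}


# Illustrative sample data only.
RECORDS = [
    {"name": "CRM", "owner": "A. Example", "description": "Customer management",
     "lifecycle": "active", "business_processes": ["Lead Management"],
     "data_objects": ["Customer"]},
    {"name": "Billing", "owner": "A. Example", "description": "Invoicing",
     "lifecycle": "active", "business_processes": [], "data_objects": []},
    {"name": "Intranet", "owner": None, "description": "",
     "lifecycle": "", "business_processes": [], "data_objects": []},
]

if __name__ == "__main__":
    for owner, score in average_completeness_by_owner(RECORDS).items():
        print(f"{owner}: {score:.0%}")
```

A score like this is deliberately crude: it measures whether fields are filled, not whether they are correct. It can still serve as a starting point for the SLAs, reviews, and incentives described above.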